Here are our ten suggestions that may help you to implement a quality monitoring programme that best influences advisor performance.

1. Define Your Quality Standards From Your Customers’ Perspective

The “father” of quality management is considered by many to be W. Edwards Deming, which is interesting, as Deming was an engineer and statistician by profession.

Why is Deming held in such high regard in the contact centre industry? Because his definition of quality, which has three primary features, is the basis of many quality management programmes. These three features are:

- Core attributes: predictable, uniform, dependable, consistent

- Based on: standards

- From the perspective of: the customer

Applying this definition to the contact centre, Mark Ungerman, Director of Product Marketing at NICE inContact, says: “If we want predictable customer outcomes, then we really need to have a well-defined process, because if advisors aren’t given the same support, you’re going to get varying results. This means that we don’t achieve a great ‘uniform’ service.”

But when you do achieve uniformity, how do you know whether the result is good or poor quality? We need a measuring stick against which to judge our scores.

This leads us to the final considerations: where and how do we set our targets? And who determines those targets?

Mark says: “If we reflect back on what Deming said, it’s the customer that sets our targets. But sometimes it will be management that sets our targets, not necessarily our customers. So, we may be measuring the wrong things when we consider our quality management programmes.”

2. Build Customer Satisfaction Into Your Quality Scorecard

The factors that drive Customer Satisfaction (CSat) should be built into your quality monitoring scorecards, so that you can measure quality from the customer’s perspective. However, contact centres often don’t specify their key drivers of CSat and instead measure an overall score.

So, we need to investigate which “service dimensions” have the greatest influence on your overall score, which you can do by surveying willing customers. The survey could include basic questions such as “What do you think are the key factors to great customer service?”

Once you have done this, you will have an idea of which “service dimensions” are of the greatest importance to your customers. This will be very useful when you think about how your quality scorecard should be weighted.

As Tom Vander Well, CEO of Intelligentics, says: “By identifying these drivers of satisfaction, we can assess the quality scorecard and give more weight to the behaviours that are associated with the most important drivers – as identified by our customers.”
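As a rough illustration of Tom’s point, the sketch below turns survey importance ratings into scorecard weights that are simply proportional to how much customers value each driver. The driver names, the 1 to 5 ratings and the 100-point scorecard are all hypothetical assumptions for the example; your own survey design and weighting rules may differ.

```python
from typing import Dict


def driver_weights(importance: Dict[str, float], total_points: int = 100) -> Dict[str, float]:
    """Turn customer-rated driver importance into scorecard weights.

    `importance` maps each service dimension to an importance score from a
    customer survey (e.g. the mean rating on a hypothetical 1-5 scale).
    Weights are proportional to those scores and scaled to sum to `total_points`.
    """
    total = sum(importance.values())
    if total <= 0:
        raise ValueError("importance scores must sum to a positive number")
    return {driver: total_points * score / total for driver, score in importance.items()}


if __name__ == "__main__":
    # Hypothetical survey averages, for illustration only (not real data).
    survey = {"resolution": 4.5, "courtesy": 3.0, "speed": 2.5}
    for driver, weight in driver_weights(survey).items():
        print(f"{driver}: {weight:.1f} points")
```

With these made-up ratings, resolution would carry 45 of the 100 points, courtesy 30 and speed 25, so the behaviours linked to resolution would count most on the scorecard.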

To find out more about using CSat scores to improve quality, read our article: How to Get More From Your Customer Satisfaction Scores

3. Ensure Scorecards Monitor Things That Are Within the Advisor’s Control

Quality scorecards need to centre around what is in the advisor’s control, i.e. “what I say” and “how I say it”. So, to score advisors in the fairest way possible, it is good to know how an advisor’s words and behaviours influence your key drivers of satisfaction.

For example, you may have identified resolution as a key driver of satisfaction, yet resolution depends heavily on what information advisors have and what their systems allow them to do, so advisors may be constrained.

But they can still influence resolution in a couple of ways, and it is these behaviours that need to be monitored.

One example is making an ownership statement. For instance, if a customer phones in and asks: “Can I check on the status of my order?” an example of an ownership statement would be: “I would be happy to check on that for you. Could I have your order number please?” That would leave a positive impression in the customer’s mind and demonstrate to them that the advisor was taking ownership of the customer’s query/problem and doing their best to find a resolution.

Another example would be making a complete effort. Tom Vander Well believes that this should be a key part of the criteria for resolution, saying: “We worked with one contact centre where when a customer asked via email: ‘Can you reset my password?’, or something to that effect, the advisors were very quickly responding with: ‘What’s your user ID?’”

“So, what’s happening is they’re putting the burden back on the customer to then respond with the user ID, even though if the advisor simply looked up that customer in a different way, they could find that user ID themselves.”

“This was largely happening because the contact centre liked to monitor how many emails an advisor was sending every day, but it went against the interests of speedy resolution. So making a complete effort is a behaviour that is in the advisor’s control and aids resolution, meaning that it’s good to include it in quality criteria, if resolution is a key driver of your satisfaction.”

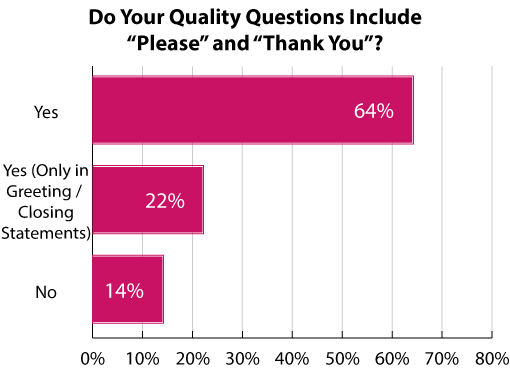

4. Monitor Basic “Pleases” and “Thank Yous”

When you look at what is driving your customer satisfaction, you will likely find that courtesy and kindness will be a key driver. But almost all advisors are courteous on the phone, right? Well, that might not be the case.

What Tom Vander Well has found through his CSat data, across various different contact centres, is that: “As more and more millennials get into the workforce at contact centres, the number of advisors that were just raised with the understanding of simple courtesies like ‘please’ and ‘thank you’ is diminishing.”

In fact, Tom’s company Intelligentics ran a pilot assessment for a client last year, with 20 advisors, most of whom were in their twenties. This assessment looked into whether these advisors said “please” or “thank you” when they asked for an account number or verification information.

According to Tom: “What we found was that in only 6% of phone calls to the customer did we ever hear a ‘please’ or a ‘thank you’ when the customer was asking for these two bits of information. This was the lowest I’d seen in 25 years of running the assessment.”

While this may seem like a rare example, our poll found that 14% of contact centres don’t include courtesy-based criteria in their quality monitoring programmes. So, where advisors are not assessed on basic “pleases” and “thank yous”, lack of courtesy may be a widespread issue.

Commenting on the poll, Tom says: “We’ve now got to a stage where basic courtesy represents a key opportunity to differentiate yourself from your competitors.”

“This is because if your competitors are not measuring or continuously coaching courtesy at all, all of a sudden your customers find that you’re providing a level of courtesy that they haven’t received elsewhere.”

To find out more about courtesy in the contact centre, read our article: The Best Courtesy Words and Phrases to Use in Customer Service

5. Consider Quality Beyond the Advisor Level

Measuring quality should go beyond what’s in the advisor’s control and what they are scored for. Quality analysts will ideally be taking notice when they hear the customer say “this is the fourth time I’m calling about this issue” and think about how advisors can be better supported to get the answer right first time.

Also, the contact centre should really be listening to its advisors, who will say things such as: “I couldn’t get the right information, because I didn’t have access to the necessary system.” In these situations, ask yourself “Why not?” Advisors hear customer complaints and can tell you what needs to be improved, in terms of quality, that is outside their control.

As an example, here Tom Vander Well provides a quick list of things that are outside an advisor’s control which have a big impact on call quality and need to be monitored/tested.

- How easy is it to access the necessary information?

- How quick is it to access the necessary information?

- Do we have enough staff in place, so that queue times are satisfactory?

- Are we giving advisors appropriate, continuous training?

- Are we giving advisors standard lead times for how long certain processes take, which they can then pass on to the customer?

- Is the IVR voice and message courteous?

This final question is particularly interesting, as how friendly and easy to use the IVR is often proves key to customer satisfaction; first impressions really do count.

As Tom says: “Most contact centres and companies don’t think at all about the message on their IVR. So often it is robotic and sounds as if somebody from IT quickly pressed record and left a very basic first message, which does not make for a great first impression.”

“So, hire somebody, get a voice actor and hire a writer to create a really fun script that sounds great and gives that positive first impression, which improves overall call quality.”

6. Research Exceptional Situations

If you address exceptional issues systematically, you can help to reduce customer dissatisfaction, according to Tom Vander Well.

The first step is to find contact types where satisfaction is low. You could either split your customer satisfaction score across contact reasons, or assess your customer complaints and look for patterns in which contact types receive the most complaints.

Then, as Tom says: “For contact types with low satisfaction rates, look at every call that took an extraordinary amount of time, instead of your Average Handle Time (AHT).”

“So, for example, let’s pull 30 calls, for the same contact reason, that took over ten minutes to complete and listen to all of them, to figure out why. We want to systemically see some trends.”
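The two steps above can be sketched in a few lines, assuming you can export call records with a contact reason, a duration and (where the customer was surveyed) a CSat score. The `Call` record, the ten-minute threshold and the 30-call sample simply mirror Tom’s example; the field names and the fixed random seed are illustrative assumptions, not a feature of any particular QA tool.

```python
import random
from collections import defaultdict
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Call:
    call_id: str
    contact_reason: str
    duration_seconds: int
    csat: Optional[float]  # survey score; None if the customer wasn't surveyed


def lowest_csat_reason(calls: List[Call]) -> str:
    """Return the contact reason with the lowest average CSat among surveyed calls."""
    scores = defaultdict(list)
    for call in calls:
        if call.csat is not None:
            scores[call.contact_reason].append(call.csat)
    if not scores:
        raise ValueError("no surveyed calls")
    return min(scores, key=lambda reason: sum(scores[reason]) / len(scores[reason]))


def long_calls_to_review(calls: List[Call], reason: str,
                         min_seconds: int = 600, sample_size: int = 30,
                         seed: int = 0) -> List[Call]:
    """Return calls for `reason` longer than `min_seconds`.

    If there are more than `sample_size` of them, draw a reproducible random
    sample of that size so the analyst listens to a manageable batch.
    """
    long_calls = [c for c in calls
                  if c.contact_reason == reason and c.duration_seconds > min_seconds]
    if len(long_calls) <= sample_size:
        return long_calls
    return random.Random(seed).sample(long_calls, sample_size)
```

Note that the durations are used only to choose which calls to research, not to score advisors, in line with the warning below about targeting AHT.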

By spending the time to research these exceptions, instead of choosing random calls for monitoring quality, you will be more likely to find ways of improving quality and therefore satisfaction.

However, be sure that you do not target advisors on AHT and that you only use timings for your research. Otherwise, advisors may pick up some behaviours that have an adverse effect on satisfaction.

For more on the dangers of AHT, read our article: Want to Increase Customer Satisfaction? Stop Measuring Average Handling Time!

7. Monitor Efficiency in an Appropriate Way

As mentioned above, targeting advisors on AHT can be bad practice, but we still find that there are contact centres that will score an advisor down if their call lasts for over a certain amount of time.

Better practice, to ensure that advisors are working in a time-effective manner, is to look at handling times across a large time span, according to Tom Vander Well.

Tom says: “You can find a nice balance by evaluating advisor performance over a large data set. So, if someone’s AHTs are consistently higher than average, you may want to look into why that is happening.”
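One way to make “consistently higher than average” concrete is to compare each advisor with the team across many weeks, rather than judging any single call. In the sketch below, weekly AHT figures per advisor are assumed to be available, the team average is an unweighted mean across advisors, and the 75% threshold is a hypothetical choice; the output is a list of people to look into, not a score.

```python
from statistics import mean
from typing import Dict, List


def consistently_high_aht(weekly_aht: Dict[str, List[float]],
                          min_share: float = 0.75) -> List[str]:
    """Return advisors whose AHT is above the team average in at least
    `min_share` of the weeks provided.

    `weekly_aht` maps advisor -> AHT in seconds for each week, with every list
    covering the same weeks. The team average is a simple mean across advisors
    (not weighted by call volume).
    """
    if not weekly_aht:
        return []
    advisors = list(weekly_aht)
    n_weeks = len(weekly_aht[advisors[0]])
    team_avg = [mean(weekly_aht[a][w] for a in advisors) for w in range(n_weeks)]
    flagged = []
    for advisor in advisors:
        weeks_above = sum(1 for w in range(n_weeks) if weekly_aht[advisor][w] > team_avg[w])
        if weeks_above / n_weeks >= min_share:
            flagged.append(advisor)
    return flagged
```

With this approach, an advisor who had one unusually long week would not be flagged, whereas someone above the team average in three out of four weeks would be.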

However, we do understand that the contact centre is often pressured by the rest of the business to cut costs, and other departments may view it as a factory-type establishment in terms of how it handles contacts. While this is certainly not the case, they may like to see evidence of how you build efficiency into your scorecards. So, Tom offers another option.

“It’s also possible to have an element in your scorecard such as ‘efficiently manages the length of the call’.”

“For example, if the call could have been three minutes in length, but it took the advisor 15 minutes, because they went to the wrong systems and weren’t looking in the right place, that’s a valid reason to assess advisors and coach them further.”

However, if you include criteria like this in your scorecard, make sure you back it up with regular calibration. Otherwise, it becomes subjective, and one analyst may score the call differently from another.
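A simple calibration check is to have several analysts score the same calls independently and then discuss any call where their scores are far apart. The sketch below assumes scores out of 100 and uses a hypothetical tolerance of 10 points; the right tolerance for your scorecard is a judgement call.

```python
from typing import Dict, List


def calibration_gaps(scores: Dict[str, Dict[str, float]],
                     tolerance: float = 10.0) -> List[str]:
    """Return call IDs where analysts' scores differ by more than `tolerance`.

    `scores` maps call ID -> {analyst name: score out of 100} for calls that
    each analyst in the calibration session has scored independently.
    """
    gaps = []
    for call_id, by_analyst in scores.items():
        values = list(by_analyst.values())
        # Only compare calls that at least two analysts have scored.
        if len(values) >= 2 and max(values) - min(values) > tolerance:
            gaps.append(call_id)
    return gaps
```

The flagged calls then become the agenda for the calibration session, where analysts agree how a criterion like “efficiently manages the length of the call” should be applied.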

To find some more advice from Tom about how best to calibrate quality scores, read our article: How to Calibrate Quality Scores

3 More Tips From Our Readers

We reached out to our readers for their top tips on how to improve contact centre quality monitoring and received many great suggestions.

So we picked out our favourite three, which are highlighted below.

8. Feed Back Scorecards and Call Recordings to Advisors

Feed back scorecards to advisors, along with comments and recordings. The comments can include constructive feedback as well as a “well done”, and the recording will help the advisor to see whether their view of quality matches your own.

Self-assessment is also a good method for aligning your views of quality with the advisor’s.

When you allow advisors to score and judge themselves, nine times out of ten you (as the quality analyst) end up building up the advisor’s confidence, because they have been so critical of themselves and really want to do a good job.

So, self-assessment helps in two ways: it encourages advisors to think for themselves about their performance, while making sure they know what we’re looking for in terms of quality.

Contributed by: Lisa

9. Involve Advisors in Creating the Scorecard

We’ve devised eight best-practice principles that form our scorecard and are used to measure advisor performance. These are set by managers, who account for what matters to the customer and what matters to the business.

Advisors are then given the opportunity to determine which six of the eight criteria should be given most weight. That way, both the business and our advisors contribute towards a balanced scorecard, which they have built together.
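The contributor doesn’t say exactly how the advisors’ choices translate into weights, so the sketch below is only one hypothetical way to do it: tally each advisor’s six picks, give the six most-chosen criteria double weight and the other two single weight, then scale to 100 points. The 2:1 ratio, the voting format and the tie-breaking rule are all assumptions for illustration.

```python
from collections import Counter
from typing import Dict, List


def weights_from_votes(criteria: List[str], votes: List[List[str]],
                       top_n: int = 6, high: float = 2.0,
                       low: float = 1.0) -> Dict[str, float]:
    """Weight the `top_n` most-voted criteria more heavily, then scale to 100.

    `criteria` is the managers' list of principles; `votes` holds each
    advisor's chosen criteria. The `high`:`low` ratio is an assumption.
    """
    tally = Counter(c for ballot in votes for c in ballot if c in criteria)
    # Rank by vote count; ties keep the managers' original order (sorted is stable).
    ranked = sorted(criteria, key=lambda c: -tally[c])
    favoured = set(ranked[:top_n])
    raw = {c: (high if c in favoured else low) for c in criteria}
    total = sum(raw.values())
    return {c: 100 * w / total for c, w in raw.items()}
```

Keeping every criterion on the scorecard, just with less weight, means the managers’ eight principles all still count while the advisors shape the emphasis.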

Contributed by: Matthew

Find out about how quality tools can help you build and automate scorecards in our article: 11 Tips and Tools to Improve Call Centre Quality Assurance

10. Tell Advisors What They Should Continue to and Begin to Do

In the summary of our feedback documents, I always use the “continue to and begin to” method.

The “continue to” elements are all about the strengths that the advisor has shown, such as transparency, call control and reaching resolution.

The “begin to” elements will highlight areas where improvement is required, and then a timescale is given by which to implement the changes.

This way of thinking was developed by Nick Drake-Knight, Director of Continue and Begin.

We also use soft skills as part of our quality parameters and call them “core skills”. These include things like empathy, enthusiasm, recognising objections, motivation, positivity, tolerance, integrity, politeness and loads more.

Contributed by: Susan

Find out more about Nick Drake-Knight’s coaching techniques in The Contact Centre Podcast – Episode 9: Call Center Coaching: How To Sustain Learning And Make It Fun!

For more information on this podcast, visit Podcast – Contact Centre Coaching: How to Sustain Learning and Make it Fun!

For more on the topic of improving contact centre quality, read our articles:

- Call Centre Quality Parameters: Creating the Ideal Scorecard and Metric

- 30 Tips to Improve Your Call Quality Monitoring

- How to Assess Quality on Email and Live Chat in the Contact Centre

Author: Charlie Mitchell

Reviewed by: Robyn Coppell

Published On: 5th Dec 2018 - Last modified: 19th Feb 2026
