Customer Satisfaction Score (CSAT) is a key metric used in contact centres and BPOs to measure how satisfied customers are with a product, service, or interaction.

In this article, we explain how to calculate a Customer Satisfaction Score (CSAT Score) and investigate how you can use your CSAT Score to drive value for your organization.

What is a CSAT Score?

A Customer Satisfaction Score is a metric that reflects how customers feel about a specific interaction, product, or service. It is typically measured through survey responses, where customers rate their satisfaction on a scale, and the scores are then averaged to provide an overall picture of customer sentiment.

This score offers valuable insights to organisations, helping them benchmark performance, identify areas for improvement, and enhance customer retention by ensuring their offerings align with customer expectations.

What Are the Pros and Cons of Calculating a CSAT Score?

Whilst a short CSAT survey does allow you to capture feedback quickly from a relatively high proportion of your customer base, the meaning behind a CSAT score can be difficult to gauge because customers share so little detail.

Also, as you’ll never be able to get every customer to take the survey, you’ll always be lacking a complete picture.

What is a Good CSAT Score?

First and foremost, a good score is any score that is better than the score you received from your previous CSAT survey – as you continue on your CX journey to wow your customers.

Looking beyond your own contact centre, however, a good benchmark is to consider anything between 75 and 85 as a good score, and anything above 90 as excellent.

You can also keep an eye on the American Customer Satisfaction Index for a broader idea of what’s typical sector by sector.

How to Calculate a CSAT Score

Many businesses tailor CSAT measurement to the needs of their own business, but most measure it in very similar ways.

Here are three common ways used by contact centres and BPOs to calculate CSAT.

1. Average Score

One method is to ask customers: “On a scale of 1–10, how satisfied were you with our service today?” This is commonly referred to as the “CSAT Question”.

You can modify this question to move the focus away from your “service” to another part of your business – like your product – if you wish to do so.

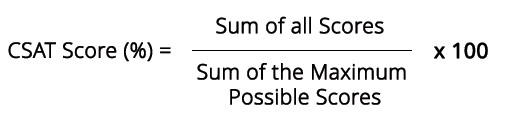

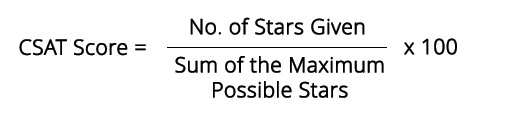

The CSAT Formula For Average Score

To calculate the average CSAT score, add up all the individual customer satisfaction scores and divide the total by the sum of the maximum possible scores (the maximum score multiplied by the number of respondents). Then, multiply the result by 100 to express it as a percentage.

With your CSAT Question, you can then use the formula below to calculate the mean average of all the scores.

CSAT Score (%) = (Sum of All Scores ÷ Sum of the Maximum Possible Scores) × 100

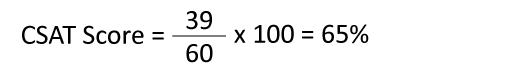

Example of an Average CSAT Score

Let’s say that we asked our question to six customers and they gave us the following responses.

| Respondent | Score between 1 and 10 | Maximum score |
|---|---|---|
| Respondent 1 | 5 | 10 |
| Respondent 2 | 7 | 10 |
| Respondent 3 | 10 | 10 |
| Respondent 4 | 3 | 10 |
| Respondent 5 | 8 | 10 |
| Respondent 6 | 6 | 10 |
| Total | 39 | 60 |

We would simply divide our total score of 39 by the sum of the maximum possible scores (6 respondents × 10 = 60) and multiply the result by 100:

CSAT Score = (39 ÷ 60) x 100 = 65%
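To make the arithmetic concrete, here is a minimal Python sketch of the average-score formula. The function name `average_csat` and the default maximum of 10 are illustrative choices rather than part of any particular survey tool; the input is simply the list of individual scores from the table above.

```python
def average_csat(scores: list[int], max_score: int = 10) -> float:
    """CSAT (%) = sum of all scores / sum of the maximum possible scores * 100."""
    if not scores:
        raise ValueError("need at least one response")
    sum_of_scores = sum(scores)
    sum_of_max_scores = max_score * len(scores)  # e.g. 6 respondents x 10 = 60
    return sum_of_scores / sum_of_max_scores * 100

responses = [5, 7, 10, 3, 8, 6]  # the six respondents from the table
print(f"{average_csat(responses):.1f}%")  # 65.0%
```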

The benefit of calculating your CSAT Score in this way is that, unlike with other scoring systems, you can calculate the average score while also capturing scores for individual customers.

With these individual scores you can place greater emphasis on score distribution. This helps project owners to investigate the common experiences of low-scoring customers, identifying key pain points – while you can also look to your high-scoring customers to help drive more value.
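As a rough illustration of looking at the distribution rather than just the average, the sketch below groups the same six responses into bands and picks out the low scorers to investigate. The respondent IDs and the band cut-offs (1 to 4 low, 5 to 7 middle, 8 to 10 high) are assumptions made for this example; set your own thresholds.

```python
from collections import Counter

# Hypothetical respondent IDs paired with their 1-10 scores (same values as the table).
responses = {"R1": 5, "R2": 7, "R3": 10, "R4": 3, "R5": 8, "R6": 6}

def band(score: int) -> str:
    """Assumed cut-offs: 1-4 low, 5-7 middle, 8-10 high."""
    if score <= 4:
        return "low"
    if score <= 7:
        return "middle"
    return "high"

distribution = Counter(band(s) for s in responses.values())
low_scorers = sorted(r for r, s in responses.items() if band(s) == "low")

print(dict(distribution))  # {'middle': 3, 'high': 2, 'low': 1}
print(low_scorers)         # ['R4']
```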

2. Happy vs Unhappy

Perhaps the easiest way to generate CSAT data is with a simple happy/neutral/unhappy question.

The results produced by this method do not need a large amount of analysis, and surveyors have the option of following up by asking customers about what could improve the score.

This method also manages to account for cultural differences better – research in Psychological Science has shown that respondents from individualistic cultures tend to pick scores at the extremes of a scale, while respondents from other cultures tend to score towards the middle.

This system doesn’t generate particularly nuanced data, but it can produce a quick health check on feelings towards a brand or service. Because it is so easy to link it to a graphical representation, it also reduces effort for the respondent.

Also, these simple visual scales can increase survey take-up, according to Morris Pentel, Chairman of The Customer Experience Foundation.

“When you use visual scales, the way in which your brain processes that is different than when you ask specific questions. Responses will be more subconscious and honest,” says Morris.

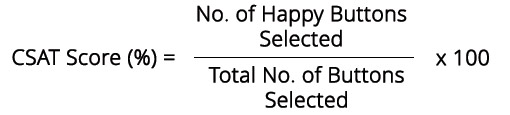

CSAT Formula For Happy vs Unhappy

To calculate the CSAT score for happy vs. unhappy responses, divide the number of “happy” responses by the total number of responses. Then, multiply the result by 100 to express it as a percentage.

To use this visual scale to calculate a CSAT Score, you would use the equation below:

CSAT Score (%) = (No. of Happy Buttons Selected ÷ Total No. of Buttons Selected) × 100

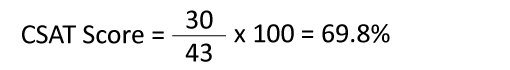

Example of Happy vs Unhappy

So, let’s say that you collect 43 responses from customers and 30 of those customers select the happy button. Your score would be 69.8%.

Why? Well, just take a look at the calculation below:

CSAT Score = (30 ÷ 43) x 100 = 69.8%
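The same calculation in Python, as a minimal sketch: every response counts towards the total, but only “happy” responses count towards the numerator. The split of the 13 non-happy responses between neutral and unhappy is hypothetical, and it makes no difference to the score.

```python
from collections import Counter

def happy_csat(responses: list[str]) -> float:
    """CSAT (%) = number of 'happy' responses / total responses * 100.

    Neutral and unhappy responses count towards the total but not the numerator.
    """
    if not responses:
        raise ValueError("need at least one response")
    counts = Counter(responses)
    return counts["happy"] / len(responses) * 100

# 43 responses: 30 happy plus a hypothetical split of the other 13.
responses = ["happy"] * 30 + ["neutral"] * 8 + ["unhappy"] * 5
print(f"{happy_csat(responses):.1f}%")  # 69.8%
```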

3. The Star Rating

You will notice that the equations for the first two ways of calculating a CSAT Score are very similar and that both will give you a percentage score.

Used by major services like Amazon and Netflix, the Star Rating system has the benefit of being familiar to customers.

Because it is so familiar to consumers, businesses can advertise the percentage of customers who gave them a five-star rating.

CSAT Formula For the Star Rating

To calculate the CSAT score for a star rating system, divide the total number of stars given by the maximum possible number of stars. Then, multiply the result by 100.

To calculate a CSAT Score using the star rating, you would use the formula below:

CSAT Score (%) = (No. of Stars Given ÷ Sum of the Maximum Possible Stars) × 100

Example of the Star Rating

This makes simple visual feedback very easy to produce, as seen in Amazon product listings:

In this example, the product has an average rating of 4.2 out of 5 stars. Using the formula above, that is equivalent to a CSAT Score of 84% (4.2 ÷ 5 × 100).
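A minimal sketch of the star-rating formula, assuming you have a count of how many customers gave each star value. The counts below are hypothetical. The function returns the average star rating, the CSAT percentage from the formula, and the share of five-star ratings that businesses sometimes advertise.

```python
def star_csat(star_counts: dict[int, int], max_stars: int = 5) -> tuple[float, float, float]:
    """Return (average stars, CSAT %, five-star share %).

    star_counts maps a star value (1-5) to how many customers gave it.
    CSAT (%) = stars given / maximum possible stars * 100.
    """
    ratings = sum(star_counts.values())
    if ratings == 0:
        raise ValueError("no ratings")
    stars_given = sum(stars * n for stars, n in star_counts.items())
    average = stars_given / ratings
    csat = stars_given / (max_stars * ratings) * 100
    five_star_share = star_counts.get(max_stars, 0) / ratings * 100
    return average, csat, five_star_share

# Hypothetical counts for ten ratings.
counts = {5: 6, 4: 3, 3: 1, 2: 0, 1: 0}
average, csat, five_star = star_csat(counts)
print(f"{average:.1f} stars, CSAT {csat:.0f}%, five-star {five_star:.0f}%")
# 4.5 stars, CSAT 90%, five-star 60%
```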

This system can be particularly good if you’re looking to find how satisfied your customers are with a product. However, it is much less commonly used to calculate a score that revolves around customer service.

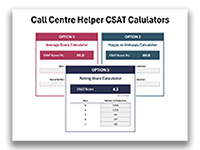

Download Free CSAT Calculators

Do you want to try our free CSAT Calculators?

Download the latest version of our free Excel-based Customer Satisfaction Calculators.

How to Use Your CSAT Score

Don’t just think of CSAT as a “pat on the back” metric. Use the scores that you calculate to further improve customer service in each of the five ways below.

Internal Benchmarking

Tracking your CSAT Scores over time is an effective way to measure how the changes that you make within the contact centre and beyond impact customer satisfaction.

Just don’t become obsessed with improving your CSAT number and lose sight of business and employee needs. It’s best to focus on improving processes and to use CSAT as a gauge of how well you are doing so.

Quality Scores

Looking for a correlation between quality scores and CSAT Scores is important, as it helps to ensure that you are coaching advisors to use behaviours that result in high customer satisfaction.

If quality and customer satisfaction scores are not matching up, it’s time to take another look at your quality scorecard and analyse your key CSAT drivers. This will help you to make sure that your quality programme is meeting customer needs.
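One simple way to check whether quality scores and CSAT are “matching up” is a Pearson correlation across advisors. The sketch below assumes you already have one average quality score and one average CSAT Score per advisor; the figures are made up. On Python 3.10 or later, `statistics.correlation` gives the same result, and the manual version is shown here so the calculation is visible.

```python
from math import sqrt

def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / sqrt(var_x * var_y)

# Hypothetical per-advisor averages: quality score (%) and CSAT Score (%).
quality = [92, 85, 78, 88, 70]
csat = [88, 80, 72, 75, 74]
print(round(pearson(quality, csat), 2))  # 0.72
```

A value near +1 suggests that advisors who score well on quality also leave customers more satisfied; a value near zero hints that the scorecard may be rewarding behaviours customers do not notice. With only a handful of advisors, treat the number as a prompt for investigation rather than proof.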

Routing

By collecting individual scores from customers, you can group your customers based on how happy they are.

You can then apply this information to your call-routing strategy, so the next time an unhappy customer calls, you can have a specialist advisor waiting for them. This will help to ensure that they have an improved experience, which can turn a negative into a positive.
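A minimal sketch of how a routing rule could use stored scores. The queue names, the threshold of 4 on a 1 to 10 scale and the `latest_scores` lookup are all hypothetical; in practice this logic would sit inside whatever routing platform your contact centre uses, which will have its own configuration options.

```python
# Hypothetical queue names and threshold; not taken from any real routing platform.
SPECIALIST_QUEUE = "specialist"
STANDARD_QUEUE = "standard"
LOW_SCORE_THRESHOLD = 4  # scores of 4 or below on a 1-10 scale count as unhappy

def choose_queue(latest_scores: dict[str, int], customer_id: str) -> str:
    """Send customers whose most recent CSAT score was low to a specialist advisor."""
    score = latest_scores.get(customer_id)
    if score is not None and score <= LOW_SCORE_THRESHOLD:
        return SPECIALIST_QUEUE
    return STANDARD_QUEUE  # no score on file, or a score above the threshold

latest_scores = {"C001": 3, "C002": 9}
print(choose_queue(latest_scores, "C001"))  # specialist
print(choose_queue(latest_scores, "C002"))  # standard
print(choose_queue(latest_scores, "C003"))  # standard
```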

Customer Retention

As well as improving call routing, there are many more things that you can do to save a customer from “churning” if they leave a low satisfaction score.

The most powerful option is to call them back, solve their problem and blow their socks off with great service in the process. This may take some effort, but it can be very powerful to act on negative feedback. Not many businesses do it!

A Proactive Strategy

By grouping your customers into low and high satisfaction categories based on their feedback, you can implement a proactive strategy to boost revenue.

For instance, you can offer a discount to a low-satisfaction customer after they have provided negative feedback to retain their business. Similarly, you can strengthen relationships with high-satisfaction customers by offering tailored promotional deals.

Find out how to formulate a great proactive strategy that boosts CSAT by reading our article: What Is Proactive Customer Service? With a Definition, Examples and Key Challenges

How to Improve Your Customer Satisfaction Score

There are lots of ways in which organizations can improve their CSAT Scores, whether that’s through better supporting their people, adapting their processes or updating their technology.

Three such ways are shared below by Paul Weald, The Contact Centre Innovator, who has found each idea to be really effective in improving customer satisfaction.

- Define the Right Culture – Based on a clear customer-orientated vision that you can communicate with your staff.

- Create an Emotional Connection – Recognize customer differences and remember: “It’s not what you say, it’s how you say it.”

- Use Customer-Orientated Measures – To benchmark the impact of the customer experience improvements that you make.

Find more ideas for improving your CSAT Score in our article: 31 Quick-Fire Tips: How to Improve Customer Satisfaction

The Pitfalls of the CSAT Score

The plan is to calculate a CSAT Score that provides an accurate representation of the happiness of your customer base. But there are certain pitfalls that may hold you back from achieving this aim…

Acting on Faulty Data

A basic principle for companies that want to solicit consumer feedback is to “act on what you learn”. If you’re not changing your strategy based on new information, why even bother to ask?

But there is another side to this. How do you know that what you’ve learnt is relevant? While it’s a problem to ignore good feedback, it’s much worse to act on unrepresentative feedback.

“A lot of customer service leaders worry about their response rates, but I emphasize two things that are much more important,” says Jeff Toister, The Service Culture Guide.

“One is representation. Does your survey sample (the people who respond) fairly represent the customers you want feedback from?”

“The second is usefulness. Do you get useful data that you can use to improve customer service?”

Here’s an example. Let’s say you want to survey customers who use a self-service feature on your website. If you only survey people who complete a certain transaction, you’ll completely miss anyone who tried to complete the transaction but gave up and called customer service instead.

So a great response rate doesn’t necessarily mean you’re getting the data you need to improve.

To avoid falling into this trap, make sure you understand where your data is coming from, and whether there are any customer groups that it does not represent.

Part of the core process around implementing change should be answering these two questions:

- Who is this going to benefit?

- How do we know that?

Survey Fatigue

Between 1997 and 2018, response rates for telephone surveys dropped from 36% to 6%, according to the Pew Research Center.

That number includes non-targeted surveys, so it’s not a direct parallel with the way businesses survey their customers. Nonetheless, it does tell us something about public willingness to engage.

The average consumer is invited to participate in three surveys a week, and fatigue easily sets in.

Businesses need to have clear rules on the frequency with which they will survey a customer, and the amount of time they are going to ask respondents to give up.

Teresa Gandy, Founder of ClarityCX, adds: “My rule of thumb is that no one customer will receive a survey more than every three months, because it just becomes trash in the mailbox. How much will their opinion have changed in a month?”
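A rule like Teresa’s is easy to enforce before a survey invitation goes out. The sketch below is an illustration under one assumption: “every three months” is approximated as 90 days. The function name and dates are hypothetical.

```python
from datetime import date, timedelta
from typing import Optional

# Assumption: "no more than once every three months" is approximated as 90 days.
MIN_GAP = timedelta(days=90)

def can_survey(last_surveyed: Optional[date], today: date) -> bool:
    """Return True if the customer may be sent a survey today."""
    if last_surveyed is None:
        return True  # never surveyed before
    return today - last_surveyed >= MIN_GAP

print(can_survey(date(2024, 1, 10), date(2024, 3, 1)))   # False: only 51 days have passed
print(can_survey(date(2024, 1, 10), date(2024, 4, 15)))  # True: 96 days have passed
print(can_survey(None, date(2024, 4, 15)))               # True: no previous survey
```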

The bottom line is that customers are extremely unlikely to complete multiple surveys, and it actually impacts the customer experience, because they’re getting annoyed with you for sending surveys all the time.

Satisfaction Is Not Well Defined

What does a customer mean when they confirm they are satisfied? Does rating 5 out of 5 mean they are fully happy or that they completely agree that they’re quite happy?

This is largely an issue of how a business chooses to word its survey questions and what it takes away from the responses.

Teresa Gandy thinks satisfaction might not be an adequate target: “I have big issues with using the word ‘satisfaction’. Satisfied customers will go to the competition when there’s an offer on – loyal ones won’t.”

Remember, what you want in a business are your loyal customers, your raving fans, because they’re the ones who will stay with you and tell everyone they know to give you their business as well.

External Benchmarking Is Tricky

Businesses have to choose the scoring method that best fits their survey goals. If they handle scoring internally they might create their own system entirely from scratch. If they use an established survey company, that company will have its own preference.

In practical terms, this makes it impossible for businesses to compare their results against their peers – as the CSAT question an organization asks will vary from one business to another. It can also make it challenging to communicate positive results to customers and stakeholders.

In addition, many contact centres fall into the trap of taking external benchmarking figures as “gospel”. Numbers are easy to manipulate and we need to be careful in how we interpret the figures that are being given to us.

For more advice on benchmarking CSAT and other metrics, read our article: Contact Centre Benchmarking – How to Get More From Your Metrics

Statistical Significance Can Change the Story

Without a very large surveyed group, individual responses can have a big impact on scores. This might not be a problem for large companies that are able to poll thousands of people, but for smaller companies it could be a major difficulty.

This is really an issue with survey technique rather than the satisfaction metric itself. The area where this is most troubling is when customer feedback is used to measure individual members of staff.

In theory, basing targets and bonuses on individual CSAT seems very intuitive. The problems arise when only a few customers respond. Scores which are fractionally different can have an impact on agent confidence, even though they may be random fluctuations.
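To see why small samples are a problem, the sketch below compares the same 80% happy-rate CSAT from 5 responses and from 1,000 responses, using a Wilson score interval (a standard way to put an approximate 95% range around a proportion). The interval method is our choice for illustration rather than something prescribed for CSAT, and the response counts are hypothetical.

```python
from math import sqrt

def wilson_interval(happy: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval (in %) for a happy/total CSAT proportion."""
    if total <= 0 or not 0 <= happy <= total:
        raise ValueError("need 0 <= happy <= total and total > 0")
    p = happy / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    margin = z * sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return (centre - margin) * 100, (centre + margin) * 100

# One extra unhappy response moves a five-response score a long way.
print(f"{4 / 5 * 100:.1f}% -> {4 / 6 * 100:.1f}%")  # 80.0% -> 66.7%

for happy, total in [(4, 5), (800, 1000)]:
    low, high = wilson_interval(happy, total)
    print(f"{happy}/{total}: {low:.1f}% to {high:.1f}%")
```

The five-response interval spans tens of percentage points (roughly 38% to 96%), while the 1,000-response interval is only a few points wide, which is why a single advisor’s score from a handful of surveys says little on its own.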

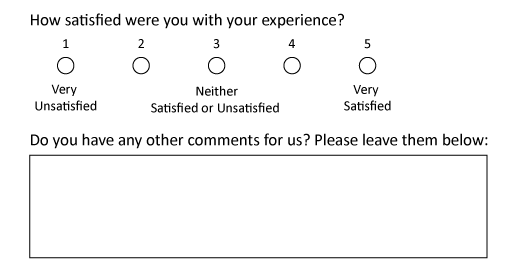

How to Create a Customer Satisfaction Survey

CSAT Scores are most often collected through surveys. Many of these surveys ask a single question, but organizations often ask a number of satisfaction-related questions to gather more insights.

These questions then form what is commonly known as a “CSAT Survey”.

If you’d like to gather more CSAT insights by creating such a survey, make sure you take on board the advice below.

Asking the Right CSAT Questions

To calculate a CSAT Score, many organizations will ask a number of questions that relate to a customer’s happiness with different parts of the customer journey.

These will all likely be asked using the same scale, making the calculations easier.

Examples of questions that you might like to include in your survey include:

- How satisfied are you with the service provided today?

- How satisfied are you with the advisor that you spoke to today?

- How satisfied are you with our website navigation?

- How satisfied are you with our delivery service?

While you need to be careful about who you survey, to avoid survey fatigue, these questions will give you a good idea of what’s lifting and hurting your overall CSAT Score.
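As a sketch of how answers on a shared scale can show what is lifting and hurting your score, the example below applies the average-score formula to each question separately. The question keys, the 1 to 5 scale and the responses are all hypothetical.

```python
# Hypothetical responses on a shared 1-5 scale; each dict is one completed survey.
surveys = [
    {"service": 5, "advisor": 5, "website": 3, "delivery": 4},
    {"service": 4, "advisor": 5, "website": 2, "delivery": 4},
    {"service": 3, "advisor": 4, "website": 2, "delivery": 5},
]

def csat_by_question(surveys: list[dict[str, int]], max_score: int = 5) -> dict[str, float]:
    """Apply the average-score formula to each question separately."""
    answers: dict[str, list[int]] = {}
    for survey in surveys:
        for question, score in survey.items():
            answers.setdefault(question, []).append(score)
    return {q: sum(s) / (max_score * len(s)) * 100 for q, s in answers.items()}

# Lowest-scoring questions first, to show what is hurting the overall score.
for question, score in sorted(csat_by_question(surveys).items(), key=lambda kv: kv[1]):
    print(f"{question}: {score:.0f}%")
# website: 47%
# service: 80%
# delivery: 87%
# advisor: 93%
```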

You can also ask more open-ended questions to harness greater insights, although these will not contribute to your score. Examples of these include:

- If you could change one thing about our product/service, what would it be?

- Which of the following product features are most valuable to you?

- Why did you choose our organization over our competitors?

For more ideas for questions to use in your surveys, read our article: The Best Questions to Ask on a Contact Centre Survey

Gathering Survey Data

Businesses have more options than ever before when it comes to surveying customers. Website, phone, IVR – there are numerous options with different benefits for cost and response rates.

Teresa Gandy told us: “The best way to get survey responses is mobile phones. Not SMS, but texting the link to the survey.”

“Don’t email the link, because people open texts more than they open emails. They just press the link and your survey is there. So when people are sat on the bus, sat on the tube, watching TV in the evening, they hit one button to open the survey.”

Find more ways for gathering feedback and CSAT survey responses in our article: 25 Good Customer Feedback Examples

Formatting the Survey

These are a few of the most important considerations for putting together a survey to generate a CSAT Score for your organization.

- Keep It Short, Keep It Simple – The average customer will devote around 90 seconds to completing a survey. That’s enough for a general CSAT question, a question about their specific experience, and an NPS question.

- Use an Odd-Numbered Scale – A good survey should have an odd-numbered scale because an odd number of scale points gives participants a middle-ground or neutral response option.

- Include an N/A Option in the Answers – A major annoyance for customers completing surveys is finding that they are obliged to answer questions which are not relevant to their experience. Offering an N/A or “I don’t know” option is important for this reason.

- Keep Your Surveys Consistent to Track Change – If you’re changing your survey questions you’re not getting consistency. You might be able to add something in or tweak it slightly, but you’re not going to get long-term quality data if you keep changing what you’re asking.
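When you offer an N/A option, those answers should be left out of the calculation entirely rather than scored as zero. A minimal sketch, assuming N/A answers are stored as `None` on a 1 to 5 scale (both assumptions for illustration):

```python
from typing import Optional

def csat_excluding_na(answers: list[Optional[int]], max_score: int = 5) -> Optional[float]:
    """Average-score CSAT (%) where None marks an N/A or "I don't know" answer.

    N/A answers are dropped from both the sum of scores and the sum of maximum
    possible scores, so they neither lift nor drag down the result.
    """
    scored = [a for a in answers if a is not None]
    if not scored:
        return None  # nobody gave a rated answer
    return sum(scored) / (max_score * len(scored)) * 100

answers = [5, None, 4, 3, None]  # hypothetical answers on a 1-5 (odd-numbered) scale
print(csat_excluding_na(answers))  # 80.0
```

Counting the two N/A answers as zero would have produced 12 ÷ 25 = 48%, understating satisfaction among the customers who actually rated the question.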

Our final tip is to use text fields, but don’t make them mandatory. The information that customers volunteer in text fields is often the most useful. However, adding text should be optional, or else many customers will simply give up.

If you wish to use text fields in your survey, it’s best to format the question as in the example below.

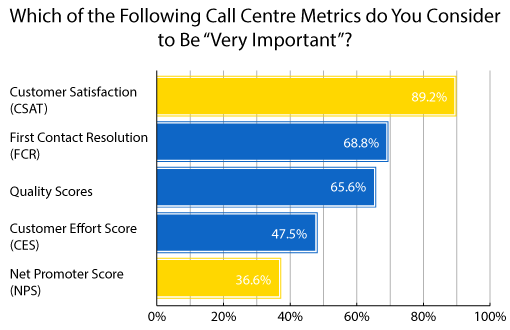

Customer Satisfaction vs Net Promoter Score – What’s the Difference?

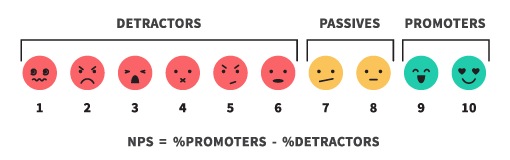

Many contact centres measure a Net Promoter Score (NPS), taking the metric as an indirect indication of customer satisfaction.

However, this is not good practice, especially if you have not proven that there is a correlation between your CSAT and NPS scores.

NPS is a measure of customer loyalty through recommendations and not satisfaction. You can have many satisfied customers, but they may not necessarily recommend you.

One key reason for this is that your business doesn’t necessarily lend itself to recommendations. Not many people would strongly endorse an insurance provider to their family and friends, for example, even though they may well be happy with the service they receive.

This could be why NPS lags in popularity when compared to other customer experience metrics like CSAT – as seen in the chart below.

This chart includes data from our report: What Contact Centres Are Doing Right Now (2019 Edition)

Yet despite these key differences between the metrics, you could perhaps adopt aspects from the methodology of calculating NPS.

How to Calculate NPS Scores

To calculate NPS, you ask the question: “On a scale of 0 to 10, how likely are you to recommend our product/service to your family/friends?”

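Respondents who answer 9 or 10 are promoters, those who answer 0 to 6 are detractors, and those who answer 7 or 8 are passives. You then put the percentages of promoters and detractors into the equation below: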

NPS = %Promoters – %Detractors
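A minimal sketch of the NPS calculation, using the standard NPS bands on a 0 to 10 scale (promoters score 9 or 10, detractors score 0 to 6, and passives score 7 or 8, counting towards the total only). The scores are hypothetical.

```python
def nps(scores: list[int]) -> float:
    """NPS = % promoters (9-10) minus % detractors (0-6) on a 0-10 scale."""
    if not scores:
        raise ValueError("need at least one response")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    # Passives (7-8) are in len(scores) but in neither count.
    return (promoters - detractors) * 100 / len(scores)

scores = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]  # hypothetical responses
print(nps(scores))  # 20.0
```

NPS is conventionally reported as a number between -100 and +100 rather than as a percentage.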

If you were to use this scale but change the question to a CSAT question like “On a scale of 0 to 10, how satisfied were you with the service provided to you today?”, you could find some really interesting insights.

In fact, this kind of measurement raises the bar for satisfaction by accepting only the highest scores as satisfied. It also encompasses more than the binary idea of satisfied/unsatisfied.
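To try the idea, the sketch below bands the six 1 to 10 satisfaction scores from the earlier table using cut-offs borrowed from NPS (9 to 10 satisfied, 7 to 8 neutral, 6 or below dissatisfied). Those cut-offs are an assumption, not a CSAT standard.

```python
def top_box_csat(scores: list[int], satisfied_from: int = 9, dissatisfied_to: int = 6) -> dict[str, float]:
    """Band satisfaction scores NPS-style and report each band as a % of responses.

    Assumption: the 9-10 / 7-8 / 0-6 cut-offs are borrowed from NPS; choose your own.
    """
    n = len(scores)
    if n == 0:
        raise ValueError("need at least one response")
    satisfied = sum(1 for s in scores if s >= satisfied_from)
    dissatisfied = sum(1 for s in scores if s <= dissatisfied_to)
    neutral = n - satisfied - dissatisfied
    return {
        "satisfied": satisfied * 100 / n,
        "neutral": neutral * 100 / n,
        "dissatisfied": dissatisfied * 100 / n,
        "net": (satisfied - dissatisfied) * 100 / n,  # NPS-style net figure
    }

scores = [5, 7, 10, 3, 8, 6]  # the six responses from the average-score table
print({k: round(v, 1) for k, v in top_box_csat(scores).items()})
# {'satisfied': 16.7, 'neutral': 33.3, 'dissatisfied': 50.0, 'net': -33.3}
```

The same responses that produced a 65% average score show only one satisfied customer in six under this stricter reading, which is the kind of contrast that can prompt a closer look.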

Just be sure to remember that CSAT and NPS are two very different metrics.

For more on calculating other common contact centre metrics, read our articles:

- How to Calculate First Contact Resolution

- How to Calculate Customer Effort

- How to Measure Employee Engagement

Author: Jonty Pearce

Reviewed by: Rachael Trickey

Published On: 7th Oct 2020 - Last modified: 30th Sep 2025
